Looking to Roll Out Redesigned Rubber

MONDAY, FEB 12, 2024

The Goodyear Tire & Rubber Company opened in 1898 as a manufacturer of tires for bicycles and carriages. Over the decades, computer technology has played an increasing role in Goodyear’s evolution. They use Kokkos, an ECP programming model, to adapt their software for exascale systems—which can also help code run efficiently on the upcoming El Capitan system.

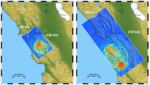

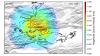

EQSIM and RAJA: Enabling Exascale Predictions of Earthquake Effects on Critical Infrastructure

WEDNESDAY, NOV 15, 2023

Exascale systems are the first HPC machines to provide the size and speed needed to run regional-level, end-to-end simulations of an earthquake event, in less time, at the higher frequencies necessary for infrastructure assessments.

A Career Discussion with Matt Rolchigo

WEDNESDAY, NOV 15, 2023

Matt Rolchigo is a computational and materials scientist at Oak Ridge National Laboratory and a researcher on the ECP’s Additive Manufacturing project. He became interested in HPC during his graduate work at Iowa State University when he interned at Lawrence Livermore National Laboratory.

Building a Capable Computing Ecosystem for Exascale and Beyond

FRIDAY, SEP 15, 2023

The exascale computing era is here. ECP collaborations have involved more than 1,000 team members working on 25 different mission-critical applications for research in areas ranging from energy and environment to materials and data science; 70 unique software products; and integrated continuous testing and delivery of ECP products on targeted DOE systems.

A Career Discussion with Tapasya Patki

THURSDAY, SEP 14, 2023

Tapasya Patki is a computer scientist at Lawrence Livermore National Laboratory, the lead investigator in ECP’s Power Steering project, and the co-lead for the ECP’s Argo project. Her group’s work has greatly improved the performance of supercomputers such as El Capitan, Frontier, and Aurora by optimizing energy utilization and power management.

ExaWorks Provides Access to Community Sustained, Hardened, and Tested Components to Create Award-Winning HPC Workflows

THURSDAY, JULY 27, 2023

LLNL contributes to ExaWorks, an Exascale Computing Project (ECP)–funded project that provides access to hardened and tested workflow components through a software development kit (SDK).

LLNL’s Lori Diachin Takes the Helm of DOE’s Exascale Computing Project

WEDNESDAY, MAY 31, 2023

Lawrence Livermore National Laboratory’s Lori Diachin takes over as director of the Department of Energy’s Exascale Computing Project on June 1.

Revisiting the Cancer Distributed Learning Environment (CANDLE) Project

WEDNESDAY, MAY 3, 2023

Podcast: LLNL contributes to CANDLE, an open-source, collaboratively developed software platform that provides deep-learning methodologies for accelerating cancer research.

WarpX Code Shines at the Exascale Level

TUESDAY, JANUARY 31, 2023

LLNL contributes to the Berkeley-led WarpX project, which is taking plasma accelerator modeling to new heights.

SUNDIALS and hypre: Exascale-Capable Libraries for Adaptive Time-Stepping and Scalable Solvers

TUESDAY, JANUARY 17, 2023

While both libraries have been around for decades, LLNL's SUNDIALS and hypre numerical libraries are seeing renewed algorithmic development with funding from ECP.

Advancing the Additive Manufacturing Revolution

WEDNESDAY, DECEMBER 21, 2022

LLNL contributes to ExaAM, a collection of optimized simulation codes that bring exascale power to predicting how entire metal parts can be 3D printed by industry.

EQSIM Shakes Up Earthquake Research at the Exascale Level

WEDNESDAY, DECEMBER 7, 2022

Earthquake engineers, seismologists, computer scientists, and applied mathematicians from Berkeley Lab and Livermore Lab form the multidisciplinary, closely integrated team behind EQSIM, which uses physics-based supercomputer simulations to predict the ramifications of an earthquake on buildings and infrastructure and create synthetic earthquake records that can provide much larger analytical datasets than historical, single-event records.

Discovering New Materials at Exascale Speeds

TUESDAY, NOVEMBER 8, 2022

LLNL computer scientists are part of the QMCPACK team, which is redesigning the open-source simulation code that promises unmatched accuracy in simulating materials to uncover their hidden properties.

Maintaining the Nation's Power Grid with Exascale Computing

THURSDAY, AUGUST 25, 2022

LLNL contributes to ExaSGD, an ECP effort to develop algorithms and techniques to address new challenges and optimize the grid's response to potential disruption events under a variety of weather scenarios.

ECP Launches Programs to Extend DOE’s HPC Workforce Culture

TUESDAY, AUGUST 2, 2022

LLNL joins the broader ECP community to address workforce needs in high-performance computing (HPC) at the DOE’s array of labs.

Enabling Cross-Project Research to Strengthen Math Libraries for Scientific Simulations

TUESDAY, MAY 3, 2022

An ECP podcast delves into math libraries, including MFEM, a Livermore-developed open-source C++ language library for finite element methods.

The Flux Supercomputing Workload Manager: Improving on Innovation and Planning for the Future

THURSDAY, FEB 24, 2022

LLNL computer scientists have devised an open-source, modular, fully hierarchical software framework called Flux that manages and schedules computing workflows to use system resources more efficiently and provide results faster.

Let’s Talk Exascale Code Development: Cancer Distributed Learning Environment (CANDLE)

TUESDAY, DEC 7, 2021

A podcast focusing on the computer codes used in a project called CANDLE (CANcer Distributed Learning Environment), which is addressing three significant science challenge problems in cancer research. LLNL is a collaborator on CANDLE.

Exascale Day Article: Early Access Systems at LLNL Mark Progress Toward El Capitan

FRIDAY, OCT 15, 2021

Though the arrival of the exascale supercomputer El Capitan at Lawrence Livermore National Laboratory is still almost two years away, teams of code developers are busy working on predecessor systems to ensure critical applications are ready for Day One.

Exascale Day Article: Powering Up: LLNL Prepares for Exascale with Massive Energy and Water Upgrade

FRIDAY, OCT 15, 2021

A supercomputer doesn’t just magically appear, especially one as large and as fast as Lawrence Livermore National Laboratory’s upcoming exascale-class behemoth El Capitan.

The Flux Software Framework Manages and Schedules Modern Supercomputing Workflows

MONDAY, SEP 13, 2021

A podcast delving into a software framework developed at Lawrence Livermore National Laboratory (LLNL), called Flux, which is widely used around the world. Flux enables science and engineering work that couldn’t be done before.

Exascale Computing Project Portfolio Presentation Videos

SATURDAY, MAY 15, 2021

Included among the project presentations is LLNL’s Kathryn Mohror’s talk on NNSA Software Technology.

Workflow Technologies Impact SC20 Gordon Bell COVID-19 Award Winner and Two of the Three Finalists

MONDAY, DECEMBER 21, 2020

LLNL’s Dan Laney discusses the incorporation of ExaWorks technologies into the workflows of the recent SC20 Gordon Bell COVID-19 submissions.

An Overview of the Exascale Computing Project

WEDNESDAY, SEPTEMBER 30, 2020

LLNL’s Lori Diachin (ECP Deputy Director) provides an overview of the DOE’s Exascale Computing Project for attendees of the Computing Directorate’s Virtual Expo.

Science at Exascale: The Art of Dealing with the Tension Between More and Less Data

SUNDAY, OCTOBER 11, 2020

LLNL’s Kathryn Mohror, Adam Moody, and Greg Kosinovsky (with Argonne collaborators) discuss the ECP VeloC-SZ project and two software technologies that support user needs concerning their scientific data.

Collaboration at Exascale

FRIDAY, OCTOBER 9, 2020

Ulrike Meier Yang provides a blog post about the advantages of working together to deliver advanced software and the necessary technical and scientific components.

Project Will Enhance Livermore Computing Center in Preparation for Exascale

THURSDAY, JUNE 25, 2020

LLNL recently announced that it had broken ground on its Exascale Computing Facility Modernization project, which will significantly expand the power and cooling capacity of the Livermore Computing Center to get it ready for next-generation exascale supercomputing hardware.

Major Update of the MFEM Finite Element Library Broadens GPU Support

MONDAY, JUNE 8, 2020

An interview with Tzanio Kolev about The Center for Efficient Exascale Discretizations (CEED) and the recently released version 4.1 of its MFEM finite element library, which introduces features important for the nation’s first exascale supercomputers.

Flexible Package Manager Automates the Deployment of Software on Supercomputers

TUESDAY, APRIL 28, 2020

A podcast with Todd Gamblin, a computer scientist in the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory (LLNL) and the creator of Spack, the deployment tool for the Exascale Computing Project’s (ECP) software stack and winner of a 2019 R&D 100 Award.

Reducing the Memory Footprint and Data Movement on Exascale Systems

THURSDAY, APRIL 16, 2020

A podcast with Peter Lindstrom, a computer scientist in the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory (LLNL). Lindstrom manages all activities related to the ZFP project, which has been folded into ECP’s ALPINE in-situ visualization project. ZFP is a compressed representation of multidimensional floating-point arrays that are ubiquitous in high-performance computing.

Delivering Exascale Machine Learning Algorithms and Tools for Scientific Research

THURSDAY, MARCH 12, 2020

A podcast, including an interview with LLNL’s Brian Van Essen, who has implemented surrogate models using deep neural networks and the Sierra and Summit resources.

Optimizing Math Libraries to Prepare Applications for Exascale Computing

THURSDAY, JANUARY 2, 2020

A podcast featuring LLNL’s Ulrike Meier Yang, principal investigator of the xSDK4ECP project and lead of hypre’s ECP effort. Her expertise spans more than three decades in numerical algorithms, particularly linear system solvers, parallel computing, high-performance computing, and scientific software design.

R&D100 Award-Winning Software Enables I/O Performance Portability and Code Progress

FRIDAY, DECEMBER 6, 2019

A podcast in which two LLNL researchers from the Exascale Computing Project (ECP) involved in the development of Scalable Checkpoint/Restart (SCR) Framework 2.0, Elsa Gonsiorowski and Kathryn Mohror, discuss what SCR is and does, the challenges involved in creating it, and the impact it is expected to have in high-performance computing.

Software Enables Use of Distributed In-System Storage and Parallel File System

THURSDAY, DECEMBER 5, 2019

LLNL’s Kathryn Mohror contributes to a podcast discussing UnifyFS and how it can provide I/O performance portability for applications, enabling them to use distributed in-system storage and the parallel file system.

First Annual Exascale Day Celebrates Discoveries on the Fastest Supercomputers in the World

WEDNESDAY, OCTOBER 2, 2019

LLNL is participating in the new National Exascale Day, a registered holiday to be celebrated annually on October 18.

Experts Weigh in on the Game-Changing Nature of Exascale Computing

SUNDAY, AUGUST 25, 2019

LLNL’s Lori Diachin is one of three experts featured in a Q&A by writer Mae Rice from the social network and blogging platform called Built In.

CANDLE Illuminates New Pathways in Fight Against Cancer

WEDNESDAY, AUGUST 14, 2019

An article on the U.S. Department of Energy (DOE) website written by Andrea Peterson takes a look at the CANcer Distributed Learning Environment (CANDLE), a cross-cutting initiative of the Joint Design of Advanced Computing Solutions for Cancer (JDACS4C) collaboration that is supported by DOE’s Exascale Computing Project.

Cray Wins NNSA-Livermore ‘El Capitan’ Exascale Contract

TUESDAY, AUGUST 13, 2019

DOE, NNSA, and LLNL have announced the signing of contracts with Cray Inc. to build the NNSA’s first exascale supercomputer, "El Capitan." El Capitan will have a peak performance of more than 1.5 exaflops (1.5 quintillion calculations per second) and an anticipated delivery in late 2022.

Robustly Delivering Highly Accurate Computer Simulations of Complex Materials

THURSDAY, JULY 18, 2019

LLNL is part of a team developing QMCPACK, a quantum Monte Carlo (QMC) methods software package, to find, predict, and control materials and properties at the quantum level. The ultimate aim is to achieve an unprecedented and systematically improvable accuracy by leveraging the memory and power capabilities of the forthcoming exascale computing systems.

CEED’s Impact on Exascale Computing Project (ECP) Efforts is Wide-Ranging

WEDNESDAY, JULY 17, 2019

The Center for Efficient Exascale Discretizations (CEED) is a research partnership involving more than 30 computational scientists from two DOE labs (Lawrence Livermore and Argonne) and five universities (the University of Illinois at Urbana-Champaign, Virginia Tech, the University of Colorado Boulder, the University of Tennessee, and Rensselaer Polytechnic Institute). Led by LLNL’s Tzanio Kolev, CEED is helping applications leverage future architectures by developing state-of-the-art discretization algorithms that better exploit the hardware and deliver a significant performance gain over conventional methods.

Offering More Detailed Simulations by Solving Problems Faster and at Larger Scales

TUESDAY, MAY 21, 2019

An LLNL team is preparing hypre to take advantage of the capabilities of the upcoming exascale computing systems. Specifically, the hypre project is delivering scalable performance on massively parallel computer architectures to positively impact a variety of applications that need to solve linear systems.

LLNL's Lori Diachin Receives High Performance Computing Industry Publication Honor

MONDAY, FEBRUARY 25, 2019

Lori Diachin, Computing's deputy associate director for science and technology, has been named one of the "People to Watch" in High Performance Computing (HPC) for 2019 by HPCwire, the online news service covering supercomputing. Diachin, who has served in numerous leadership positions in LLNL's Computing Directorate, is the deputy director for the Department of Energy's Exascale Computing Project (ECP).

Demonstrating In-Situ Computer Simulation Visualization and Analysis on Sierra

TUESDAY, JANUARY 8, 2019

ALPINE Ascent is the first in-situ visualization and analysis tool meant for next-generation supercomputers, said Cyrus Harrison of Lawrence Livermore National Laboratory (LLNL) and the Department of Energy’s Exascale Computing Project (ECP). ALPINE Ascent is referred to as a flyweight in-situ visualization and analysis library for ECP applications.

ECP Receives HPCwire Editors’ Choice Award for Best HPC Collaboration of Government, Academia, and Industry

TUESDAY, NOVEMBER 13, 2018

The U.S. Department of Energy’s (DOE) Exascale Computing Project (ECP) announced it has been recognized by HPCwire with an Editors’ Choice Award for the project’s extensive collaborative engagement with government, academia, and industry in support of the ECP’s effort to accelerate delivery of a capable exascale computing ecosystem, as the nation prepares for the next era of supercomputers capable of a quintillion operations per second.

Spack: The Deployment Tool for ECP’s Software Stack

FRIDAY, OCTOBER 26, 2018

Audio chat with Todd Gamblin, a computer scientist in the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory (LLNL). His research focuses on scalable tools for measuring, analyzing, and visualizing parallel performance data. He leads LLNL’s DevRAMP (Reproducibility, Analysis, Monitoring, and Performance) team, and he is the creator of Spack, the deployment tool for the Exascale Computing Project’s (ECP) software stack.

Exascale Computing Project Names Lori Diachin as New Deputy Director

TUESDAY, JULY 31, 2018

The Department of Energy’s Exascale Computing Project (ECP) has named Lori Diachin as its new Deputy Director effective August 7, 2018. Lori replaces Stephen Lee, who has retired from Los Alamos National Laboratory. Lori has been serving as the Deputy Associate Director for Science and Technology in the Computation Directorate at Lawrence Livermore National Laboratory (LLNL) since 2017.

ECP Announces New Co-Design Center to Focus on Exascale Machine Learning Technologies

FRIDAY, JULY 20, 2018

The Exascale Computing Project has initiated its sixth Co-Design Center, ExaLearn, to be led by Principal Investigator Francis J. Alexander, Deputy Director of the Computational Science Initiative at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory. ExaLearn is a co-design center for Exascale Machine Learning (ML) Technologies and is a collaboration initially consisting of experts from eight multipurpose DOE labs. Brian Van Essen is the LLNL Lead.

Scaling the Unknown: The CEED Co-Design Center

THURSDAY, JULY 12, 2018

CEED is one of five co-design centers in the Exascale Computing Project, a collaboration between DOE’s Office of Science and National Nuclear Security Administration. The centers facilitate cooperation between the ECP’s supercomputer vendors, application scientists and hardware and software specialists. Led by Livermore, the CEED collaboration alone encompasses more than 30 researchers at two DOE national laboratories – LLNL and Argonne – and five universities.

New Simulations Break Down Potential Impact of a Major Quake by Building Location and Size

THURSDAY, JUNE 28, 2018

With unprecedented resolution, scientists and engineers are simulating precisely how a large-magnitude earthquake along the Hayward Fault would affect different locations and buildings across the San Francisco Bay Area. A team from Berkeley Lab and Lawrence Livermore National Laboratory, both U.S. Department of Energy (DOE) national labs, is leveraging powerful supercomputers to portray the impact of high-frequency ground motion on thousands of representative different-sized buildings spread out across the California region.

Audio Update: Hardware and Integration

TUESDAY, MAY 15, 2018

In an audio discussion, ECP’s Hardware and Integration (HI) Director Terri Quinn (Lawrence Livermore National Laboratory) describes how the HI focus area performs its mission and what its top goals are.

Efficiently Using Power and Optimizing Performance of Scientific Applications at Exascale

THURSDAY, MARCH 15, 2018

Tapasya Patki of Lawrence Livermore National Laboratory leads the Power Steering project within the ECP. Her project provides a job-level power management system that can optimize performance under power and/or energy constraints. She has expertise in the areas of power-aware supercomputing, large-scale job scheduling, and performance modeling.